AI Governance Failures: Real-World Examples, Risks, and Lessons Learned

Businesses use AI for everything from approving loans and recommending medical treatments to filtering job candidates and managing supply chains. According to IBM’s 2025 Cost of a Data Breach report, 97% of organizations that reported an AI-related security incident lacked proper AI access controls, a gap that points directly to weak AI governance.

AI governance affects businesses across industries, including banking, healthcare, e-commerce, retail, and logistics. Your company may already be feeling the effects of weak AI governance. According to the same IBM report, 63% of breached organizations either don’t have an AI governance policy or are still developing one. The impact differs by industry.

Healthcare teams deal with biased risk predictions. Banks face regulatory fines. Retailers lose trust when recommendations fail in public. Supply chains break down when AI-driven forecasts fail. In each case, the root cause is broken governance.

That’s why the global AI governance market is growing and is projected to reach USD 3.35 billion by 2026. As more companies adopt AI, leadership teams need to treat governance as a business discipline, not just a box to check.

In this blog, we’ll look at real-world examples of AI governance failures, the big risks they pose, and the important lessons that businesses need to learn to avoid similar problems in the future.

What Is AI Governance?

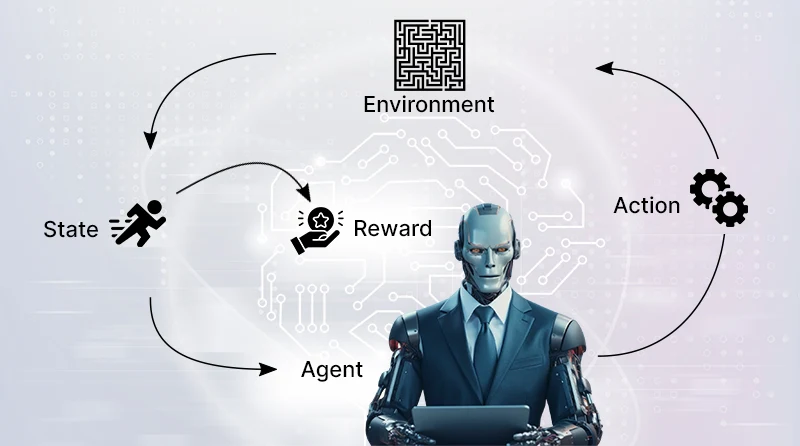

AI governance is the set of rules, policies, and oversight that helps organizations build, use, and monitor AI. In short, it answers four basic questions: who is in charge of the AI, what it can do, how teams monitor it, and what happens when something goes wrong.

AI governance is not a checklist that you complete once. It is an ongoing way of working. It brings together data teams, product owners, compliance leaders, and executives around a common goal. Without it, AI systems operate in silos, and once they go into production, it’s not always clear who owns them.

Strong AI governance covers the full lifecycle of an AI system. It starts with design, when teams choose what data to use and which outcomes are acceptable. It continues once the system is part of daily operations, with ongoing monitoring, auditing, and review.

Businesses need an effective AI governance framework for practical reasons. If teams don’t pay attention to data quality, AI systems can amplify bias. If privacy controls stay weak, systems can expose private information. If no one reviews their outputs regularly, they can make wrong decisions at scale. Even well-designed models drift over time as user behavior and the environment change. These risks are making AI governance failures more common in every field.

AI ethics and governance meet in the framework itself. Governance puts an AI ethics framework into practice, which helps companies reduce the risk of an AI-related data breach. It creates accountability, protects user trust, and supports compliance with regulatory and social standards.

Why AI Governance Is Failing Today

A lot of companies talk about AI governance, but problems keep happening. The issue is not a lack of awareness. It is how governance is designed, owned, and enforced inside real enterprises.

Ownership breaks once AI reaches production

During development, teams invest heavily in the system, and then they hand it to operations. Over time, no one is responsible for results. When mistakes happen, product teams blame data, data teams blame models, and leadership doesn’t step in until it’s too late. After launch, clear AI governance responsibilities rarely exist.

AI governance monitoring remains weak or reactive

A lot of businesses test models in controlled settings but don’t pay attention to how they change in the real world. People’s behavior changes, patterns in data change, and outputs slowly get worse. Teams only look into things after customers complain or the government gets involved. AI governance monitoring that only works after something bad has happened doesn’t do its job.
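One way to make monitoring proactive is to compare live inputs or scores against a baseline on a schedule, rather than waiting for complaints. The sketch below is a minimal illustration using the Population Stability Index (PSI). The function names, the bin count, and the 0.1 and 0.25 thresholds are assumptions for illustration (the thresholds are a common rule of thumb, not a standard), and a real deployment would tune them per model.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], live: Sequence[float], bins: int = 10
) -> float:
    """Compare the distribution of a score or feature in two samples."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    base_shares = shares(baseline)
    live_shares = shares(live)
    return sum(
        (lv - bs) * math.log(lv / bs) for bs, lv in zip(base_shares, live_shares)
    )


def drift_status(psi: float, warn: float = 0.1, alert: float = 0.25) -> str:
    """Map a PSI value to an escalation level (thresholds are assumptions)."""
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "ok"
```

Running a check like this on every scoring batch, and routing "alert" to a named owner, turns drift into a scheduled review item instead of a surprise.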

Ethical principles don’t translate into daily decisions

Companies write AI ethics statements, but product teams don’t know how to apply them. Engineers are under pressure to deliver. Managers put speed first. Instead of being built in, ethical review becomes optional. This disconnect makes AI ethics and governance more about paperwork than practice.

Leadership underestimates AI risk exposure

Executives often see AI as a technology upgrade rather than a decision-making system. This view keeps senior leaders from getting involved in governance committees. In their current form, many governance committees have no real authority. Budget approvals also leave out the cost of long-term AI monitoring. AI governance leadership needs the same intensive oversight that finance and cybersecurity receive.

Regulation evolves more slowly than AI deployment

Laws take longer to update than AI models and use cases take to change. As a result, many organizations wait for regulators to set rules before they create their own. That delay makes it hard to assign accountability, keep records, and escalate issues. Good AI governance must be in place before regulators step in. This reactive approach to compliance is often to blame for AI governance failures.


Real-World Examples of AI Governance Failures

AI governance failures happen because companies don’t realize how quickly AI mistakes scale when there aren’t enough rules in place. The examples below show how promising AI systems failed because they lacked oversight, clear responsibilities, or strong monitoring.

1. Amazon’s Recruiting AI Penalized Women (2014-2017)

Amazon built an AI tool to speed up hiring by screening job applicants. The system was trained on past hiring data, which came mostly from men, and learned to prefer male candidates. Over time, it gave lower scores to resumes that signaled the applicant was a woman.

Governance failed because there were no structured bias audits after the system was put into use. Teams were more interested in getting things done quickly than in making sure the results were fair. There wasn’t a clear owner who kept an eye on the effects on gender. Amazon found the problem in 2015 but was unable to fix it. In 2017, they scrapped the entire project.
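A structured bias audit can start small. The sketch below compares selection rates across groups and flags a ratio below 0.8, the "four-fifths" rule of thumb used in US employment guidance. It is not a reconstruction of Amazon's system: the function names are hypothetical, the default threshold is an assumption, and a real audit would add statistical tests and legal review.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """decisions: (group, was_selected) pairs from a screening model."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    # If nobody was selected in any group, there is no disparity to report.
    return min(rates.values()) / highest if highest else 1.0


def audit(decisions: Iterable[Tuple[str, bool]], threshold: float = 0.8) -> dict:
    """Return per-group rates, the impact ratio, and whether to escalate."""
    rates = selection_rates(decisions)
    ratio = adverse_impact_ratio(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}
```

Scheduling a check like this after deployment, with a named owner for flagged results, addresses both gaps described above: the missing audits and the missing accountability.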

2. COMPAS Algorithm: Racial Bias in Criminal Justice

Courts across the US used the COMPAS algorithm to estimate how likely a defendant was to commit another crime. Judges used its scores to inform sentencing and parole decisions. A 2016 ProPublica investigation found that the system’s errors were not evenly distributed. Black defendants who did not reoffend were nearly twice as likely as white defendants to be labeled high-risk, while white defendants who did reoffend were more often labeled low-risk.

The governance failure came from a lack of transparency. Defendants couldn’t question or understand how the model worked. There was no independent oversight of how courts used the scores. There was no way to appeal algorithmic mistakes. When AI has a say in decisions that change people’s lives, AI governance failures become civil rights issues, not just technical problems.

3. Healthcare Algorithm Denied Care to Black Patients (2019)

A widely used healthcare algorithm affected an estimated 200 million people in the US. It used past healthcare costs to predict which patients needed extra help. The problem? Less money was spent on Black patients with the same health needs because they had less access to care. As a result, the algorithm incorrectly concluded that healthier white patients needed more care than sicker Black patients.

There were gaps in governance at the design stage. Teams didn’t question the assumptions built into the training data. Clinical oversight remained limited. The model was never continuously validated against real outcomes. The result was inequitable care driven by automated bias. This case shows how AI governance failures in healthcare can make systemic inequalities worse.

4. Clearview AI’s Unauthorized Facial Recognition

Clearview AI built a facial recognition system by scraping more than 3 billion photos from social media and websites. People never consented. Most didn’t even know the company existed. Clearview then sold access to this database to government agencies and private groups.

The core issue was governance. Clearview had no data governance process, no consent mechanism, and no real privacy protections. The company gathered highly sensitive biometric information without any clear rules or limits on how it could be used.

The impact was severe. Regulators in several countries banned or restricted Clearview’s technology. The company was hit with fines, including a penalty of about £7.5 million from the UK’s data protection regulator, and still faces lawsuits in the US and Europe.

5. Cambridge Analytica Weaponized Facebook Data

Cambridge Analytica used data from up to 87 million Facebook users to build psychological profiles for targeting voters. Most users never consented to this use. Facebook let third-party apps access user data but didn’t monitor how that data was used afterward.

The governance gap was a failure to control third-party data. Once Facebook granted partners access, it did not verify that they stayed within the limits it had set. There were no restrictions on where the data flowed, and Facebook had no visibility into how partners used it.

The impact was felt worldwide. Governments raised concerns about election manipulation. Public trust in Facebook fell. The US Federal Trade Commission fined the company $5 billion for privacy violations, one of the biggest fines in tech history.

6. Tesla Autopilot Safety Failures

By 2024, US regulators had recorded 13 crashes involving Tesla’s Autopilot that resulted in fatalities. Many drivers believed the system could operate as a fully autonomous vehicle, and keeping drivers focused on the road while Autopilot was engaged proved difficult, which created significant risk.

The governance failure came from inaccurate communication about what the system could do, starting with the misleading name “Autopilot”. Driver monitoring systems didn’t always catch when someone wasn’t paying attention. Safety testing didn’t account for how drivers behave in real life.

The impact was serious. There were many deaths. US regulators opened investigations. Lawsuits followed, putting Tesla’s safety claims to the test.

7. Boeing 737 MAX MCAS System Failure

Boeing’s Maneuvering Characteristics Augmentation System (MCAS) could automatically push the plane’s nose down based on data from just one sensor. Pilots weren’t fully informed about the system, and they weren’t trained well enough to respond when it malfunctioned.

There was a major governance problem. Boeing didn’t do enough testing or documentation and didn’t make clear how MCAS behaved in real flight. Pilots didn’t know when or why the system would override their manual inputs.

The impact was devastating. Two crashes killed 346 people. Aviation authorities worldwide grounded the 737 MAX fleet. Boeing agreed to pay $2.5 billion in penalties and compensation and lost trust for a long time. This is still one of the worst AI governance failures in aviation history.
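The single-sensor dependency described above points to a general control: automation should act only when redundant inputs agree. The sketch below is a generic illustration of that idea, not Boeing's actual logic. The names and the 5.0 disagreement threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AutomationDecision:
    allow_automation: bool
    reason: str


def cross_check(
    sensor_a: Optional[float],
    sensor_b: Optional[float],
    max_disagreement: float = 5.0,  # hypothetical tolerance, in sensor units
) -> AutomationDecision:
    """Allow automated action only when two independent readings agree."""
    if sensor_a is None or sensor_b is None:
        return AutomationDecision(False, "sensor reading missing")
    if abs(sensor_a - sensor_b) > max_disagreement:
        return AutomationDecision(False, "sensors disagree")
    return AutomationDecision(True, "sensors agree")
```

When the check fails, the safe default in this sketch is to hand control back to the human operator and say why, which also covers the documentation gap: the operator sees a reason, not just a behavior.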

8. Waymo Autonomous Vehicle Recall (2024–2025)

Waymo recalled 1,212 self-driving cars because its software couldn’t reliably detect thin or suspended objects like chains, gates, and utility poles. The cars hit obstacles that a human driver would easily see and avoid.

The governance gap came from insufficient edge-case testing and pressure to deploy. The system worked well in controlled settings but struggled in real-world situations that hadn’t been flagged as high-risk.

At least seven crashes were reported as a result, and Waymo’s reputation as a safety-first self-driving car company took a hit.

9. Knight Capital Trading Algorithm Failure

In 2012, Knight Capital deployed new trading software that hadn’t been properly tested. Once live, the system sent millions of unintended orders within minutes. The company lost $440 million in just 45 minutes and disrupted trading in parts of the US stock market.

The failure of governance was simple but serious. Code that hadn’t been tested made it to production. There was no way to roll back. There were no safety measures in place to stop strange behavior.

The failure almost destroyed the business. Knight Capital needed emergency funding and was later acquired by a competitor.
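The missing safety measure here is often called a circuit breaker or kill switch: a limit that halts automated activity when volume looks abnormal, and stays halted until a person resets it. The sketch below is a minimal illustration under assumed names and limits. It does not describe Knight Capital's systems.

```python
from collections import deque
from typing import Deque


class OrderCircuitBreaker:
    """Halts order flow when too many orders arrive inside a time window."""

    def __init__(self, max_orders: int, window_seconds: float) -> None:
        self.max_orders = max_orders
        self.window_seconds = window_seconds
        self._timestamps: Deque[float] = deque()
        self.tripped = False

    def allow(self, now: float) -> bool:
        """Return True if an order at time `now` (in seconds) may be sent."""
        if self.tripped:
            return False
        # Forget orders that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.max_orders:
            # Stay halted until a human calls reset().
            self.tripped = True
            return False
        self._timestamps.append(now)
        return True

    def reset(self) -> None:
        """Manual reset, intended to be called only after human review."""
        self._timestamps.clear()
        self.tripped = False
```

The design choice worth noting is that the breaker does not recover on its own. Requiring a manual reset forces a person to look at what happened before automation resumes.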

10. Apple Card Gender Discrimination Case

Apple Card’s credit algorithm was accused of giving women lower credit limits than men with the same financial profiles. In one well-known case, entrepreneur David Heinemeier Hansson reported that his credit limit was 20 times higher than his wife’s, even though they shared finances.

The governance gap came from an opaque algorithm and a lack of bias testing. Apple and its partners couldn’t explain how the model made decisions or show that it was fair to all applicants.

The effects included an investigation by the government, public outrage, and claims of gender discrimination.

11. Facebook AI Failed to Catch Hate Speech

Facebook relied heavily on AI to moderate huge volumes of content. The system often removed harmless posts but missed real hate speech, misinformation, and calls for violence. It struggled with context, sarcasm, and cultural differences.

The governance failure came from biased training data and poor human oversight. Facebook automated moderation faster than it could scale human review. Moderators only stepped in after damage had already been done.

The impact was serious. Researchers found that unchecked hate speech and misinformation contributed to violence in some regions. Facebook’s reputation suffered around the world.

12. YouTube Recommendation Engine Promoted Extremism

YouTube’s recommendation algorithm was optimized for engagement. Controversial or extreme content kept people watching longer, so over time the system pushed users toward more extreme content.

The governance gap was built into the design. YouTube picked the wrong measure of success: watch time mattered more than user safety. Ethical guardrails were weak or missing.

The effects included boycotts by advertisers, public anger, and government scrutiny of online radicalization.

13. Google AI Overviews Gave Dangerous Advice (2024)

Google put AI-generated answers at the top of search results. The system gave out ridiculous and dangerous advice with confidence. It told users to put glue on pizza and eat rocks to stay healthy, and it treated online jokes as fact.

The governance failure was rushing to launch without verification. There was no fact-checking layer. The system couldn’t tell the difference between real advice and jokes. The effects were public ridicule, loss of trust, and the removal of features.

14. McDonald’s AI Drive-Thru Failure (2024)

More than 100 McDonald’s locations tried out AI voice ordering. The system often got customers’ requests wrong. It added things that people didn’t order, like hundreds of Chicken McNuggets and bacon on ice cream.

The governance gap was a lack of real-world testing and the absence of an easy human override. In demos, the AI worked, but it struggled in loud, fast-paced restaurants.

The result was unhappy customers, viral videos, bad press, and the program shutting down in June 2024.
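A plausibility check with a staff handoff is one simple form of the missing override. The sketch below is hypothetical: the item names, the quantity limit, and the return labels are made up for illustration and do not describe the system McDonald's tested.

```python
from typing import List, NamedTuple


class OrderLine(NamedTuple):
    item: str
    quantity: int


def implausible_lines(order: List[OrderLine], max_quantity: int = 20) -> List[OrderLine]:
    """Return the order lines whose quantity exceeds a plausible limit."""
    return [line for line in order if line.quantity > max_quantity]


def handle_order(order: List[OrderLine], max_quantity: int = 20) -> str:
    """Route implausible orders to a person instead of submitting them."""
    if implausible_lines(order, max_quantity):
        return "hand_off_to_staff"
    return "confirm_with_customer"
```

Even a check this crude would stop an order for hundreds of items from reaching the kitchen without a person looking at it first.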

15. NYC MyCity Chatbot Gave Illegal Advice (2024)

New York City created the MyCity chatbot to help small business owners. Instead, it gave advice that was wrong and sometimes illegal. It suggested that employers could take workers’ tips, ignore harassment complaints, and serve unsafe food.

There was a big gap in governance. The city launched the tool without adequate legal review or testing. The chatbot ran without compliance safeguards.

The advice could have led businesses to break the law. The chatbot drew public criticism and lost credibility. Even so, it stayed online despite these problems.

Major Risks Caused by AI Governance Failures

AI systems without proper governance expose businesses to more than technical problems. The mistakes that follow create tangible business risks, and those risks grow if left unaddressed.

Regulatory and legal exposure increases

Regulators scrutinize AI systems that make harmful or unfair decisions. They now expect companies to document their controls, audits, and accountability. Organizations with weak governance can’t explain their decisions or prove that they follow the rules. This can lead to fines, lawsuits, and forced system shutdowns.

Reputational damage spreads faster than recovery

People lose faith when AI governance failures become visible. One bad event can make the news, cause a social backlash, and hurt a brand’s reputation for good. Governance failures often become public before the responsible teams understand what went wrong.

Operational instability disrupts core functions

When AI models drift or break, they can destabilize operations such as pricing, hiring, forecasting, and customer service. If AI governance monitoring is weak, teams react too slowly, which can lead to supply chain problems, staffing shortages, or lost customers.

Ethical harm undermines long-term adoption

Unfair or opaque AI results hit vulnerable groups hardest. These harms make employees less confident, customers less willing to accept AI, and partners less likely to trust it. Even when the technology improves, past ethical failures slow adoption.

Financial losses compound quietly

A failed AI project leaves behind wasted spending, drained resources, and delayed product development. Because lost revenue is recognized late, recovery takes months and costs more than anticipated.

Key Lessons Learned From AI Governance Failures

These failures follow a clear pattern that every organization needs to recognize. The lessons apply to leaders in every business area.

Start with governance before building

Many organizations treat governance as a post-launch task and keep repeating the same mistakes. Before building any product, AI development teams should set up a governance structure that defines acceptable use, risk levels, and who is ultimately accountable. Early decisions about data sources and goals shape results long before models are deployed. To avoid AI governance failures, build the governance infrastructure before you build the AI.

Continuous oversight matters more than perfect models

Once real people use a system in varied situations, even the best-trained model starts to drift. Strong AI governance monitoring focuses on bias checks, performance over time, and escalation triggers. It can’t wait for problems or complaints before it starts.

Clear ownership prevents decision paralysis

Every AI system needs a named owner who can step in when needed. When everyone is responsible, no one is. Clearly defined AI governance responsibilities make for faster responses when systems fail or cause harm.
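Ownership is easier to enforce when it is recorded somewhere that can be checked automatically. The sketch below assumes a simple in-house registry of production models. The field names, the example model names, and the 90-day review interval are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional


@dataclass
class ModelRecord:
    name: str
    owner: Optional[str]               # a named person or role, not a team alias
    escalation_contact: Optional[str]  # who gets called when the model misbehaves
    last_review: Optional[date]


def governance_gaps(
    records: List[ModelRecord], today: date, max_review_age_days: int = 90
) -> Dict[str, List[str]]:
    """Return the ownership and review gaps found for each model."""
    gaps: Dict[str, List[str]] = {}
    for record in records:
        issues: List[str] = []
        if not record.owner:
            issues.append("no named owner")
        if not record.escalation_contact:
            issues.append("no escalation contact")
        if record.last_review is None:
            issues.append("never reviewed")
        elif (today - record.last_review).days > max_review_age_days:
            issues.append("review overdue")
        if issues:
            gaps[record.name] = issues
    return gaps
```

A report like this can run before every release, so that a model without an owner or a recent review never reaches production unnoticed.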

Leadership involvement sets the tone

For AI governance to work well, executives need to be involved, not just delegate. When leaders treat AI governance like finance or security, their teams do the same. Governance frameworks only work when senior leaders are held accountable.

Human judgment must remain in the loop

AI should help people make decisions, not take away their responsibility. Companies that keep human override systems recover from failure more quickly. Human review guards against edge cases that automated systems can’t see coming.
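In practice, keeping a human in the loop usually means a routing rule: the model decides routine cases, and a person decides the rest. The sketch below is one possible shape for that rule. The names, the 0.9 confidence threshold, and the idea of a `high_impact` flag are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CaseDecision:
    case_id: str
    model_output: str
    confidence: float   # model's own confidence, 0.0 to 1.0
    high_impact: bool   # e.g. decisions affecting credit, care, or liberty
    final_output: str = ""
    decided_by: str = ""


def route(decision: CaseDecision, min_confidence: float = 0.9) -> str:
    """High-impact or low-confidence cases always go to a person."""
    if decision.high_impact or decision.confidence < min_confidence:
        return "human"
    return "auto"


def finalize(
    decision: CaseDecision,
    reviewer: Optional[str] = None,
    reviewer_output: Optional[str] = None,
    min_confidence: float = 0.9,
) -> CaseDecision:
    """Record the final outcome and who was responsible for it."""
    if route(decision, min_confidence) == "human":
        if reviewer is None or reviewer_output is None:
            raise ValueError(f"case {decision.case_id} requires human review")
        decision.final_output = reviewer_output
        decision.decided_by = reviewer
    else:
        decision.final_output = decision.model_output
        decision.decided_by = "model"
    return decision
```

Recording `decided_by` on every case keeps responsibility visible: an audit can show which outcomes a person approved and which the model produced alone.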

Final Thoughts

AI failures rarely happen because of bad intent or careless teams. They happen when companies underestimate how much discipline AI systems need after deployment. AI governance failures expose problems with ownership, oversight, and leadership judgment.

The examples from healthcare, finance, hiring, transportation, and public services all show the same thing. AI systems make decisions faster than people can respond. When there isn’t clear governance, small mistakes can cause big problems. Monitoring fails. Accountability blurs. Trust erodes.

Good AI governance doesn’t stop innovation. It keeps innovation safe. Companies that define ownership early, invest in ongoing monitoring, and include leadership in oversight build systems that adapt instead of breaking down. Governance makes AI a useful business tool instead of a risk multiplier.

FAQs

What are AI governance failures?

AI governance failures occur when companies deploy or run AI systems without clear rules, accountability, or oversight. These failures often lead to unfair decisions, unsafe results, legal violations, or loss of public trust. The problem lies in weak governance structures, not in AI technology itself.

Why do AI governance failures happen so often?

A lot of companies put speed and new ideas ahead of control. After deployment, it’s not clear who owns what. Monitoring is still reactive. Leaders don’t think about long-term risk enough. Without clear AI governance responsibilities, systems drift until problems become public.

What risks do AI governance failures create?

AI governance failures lead to legal exposure, reputational damage, ethical violations, operational instability, and lost revenue. Under poor governance, errors spread quickly because AI keeps operating autonomously until the organization steps in.

How can organizations prevent AI governance failures?

Organizations must embed governance across the AI lifecycle. Clear ownership, continuous monitoring, leadership involvement, ethical review, and human oversight all reduce risk. Treating AI governance like a financial or cybersecurity control makes prevention practical and sustainable.