Nvidia Launches Vera Rubin AI Platform at CES 2026

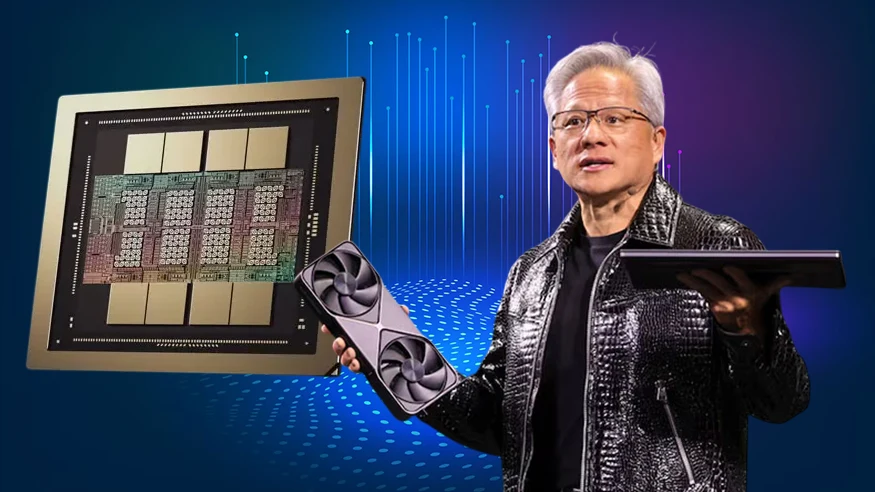

AI giant Nvidia unveiled its next-generation Vera Rubin superchip on Monday at CES 2026 in Las Vegas. The superchip is a unified processor consisting of one Vera CPU and two Rubin GPUs, two of the six chips that make up what Nvidia is marketing as its Rubin platform.

Nvidia is positioning the Rubin platform as uniquely suited to agentic AI, advanced reasoning models, and mixture-of-experts (MoE) models. An MoE model combines many specialised "expert" subnetworks and uses a router to send each piece of input to only the most relevant experts, rather than running the entire model on every query.
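The routing idea can be sketched in a few lines of Python. This is a toy illustration of top-k gating, a common way MoE layers pick experts, assuming a simple linear router and made-up stand-in experts. It is not Nvidia's software or any particular model's implementation, and the names `route`, `moe_forward`, `EXPERTS` and `ROUTER` are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route(token_vec, router_weights, k=2):
    """Score every expert for one token and keep only the top-k.

    Returns (expert_index, weight) pairs, with weights renormalised to sum to 1.
    """
    scores = [sum(w * x for w, x in zip(row, token_vec)) for row in router_weights]
    probs = softmax(scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(token_vec, experts, router_weights, k=2):
    """Run only the selected experts and blend their outputs by router weight."""
    out = [0.0] * len(token_vec)
    for i, weight in route(token_vec, router_weights, k):
        expert_out = experts[i](token_vec)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out

# Hypothetical stand-in experts; in a real model each is a learned network.
EXPERTS = [
    lambda v: [2 * x for x in v],
    lambda v: [-x for x in v],
    lambda v: [x + 1 for x in v],
    lambda v: [0.0 for _ in v],
]
# One router weight row per expert (made-up values).
ROUTER = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0], [-1.0, 0.0]]

token = [1.0, 0.0]
print(route(token, ROUTER))  # experts 0 and 2 are chosen
print(moe_forward(token, EXPERTS, ROUTER))
```

Only two of the four experts do any work for this token, which is the property that lets MoE models grow very large while keeping the compute per token comparatively modest.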

Nvidia CEO Jensen Huang said in a statement that demand for AI computing, for both training and inference, is skyrocketing.

He added that, with Nvidia's annual cadence of delivering a new generation of AI supercomputers and its extreme codesign across six new chips, Rubin marks a giant leap to the next frontier of AI.

Besides the Vera CPU and Rubin GPUs, there are four more networking and storage chips in the Rubin platform: the Nvidia NVLink 6 Switch, Nvidia ConnectX-9 SuperNIC, Nvidia BlueField-4 DPU and Nvidia Spectrum-6 Ethernet Switch.

These chips can then be combined into Nvidia's Vera Rubin NVL72 server, which packs 72 GPUs into a single unit. Connect several NVL72 systems and you get Nvidia's DGX SuperPOD, in effect a giant AI computer. These are the kinds of systems that hyperscalers such as Microsoft, Google and Amazon, as well as social media giant Meta, are spending billions of dollars to acquire.

Nvidia is also touting a new AI storage offering, Nvidia Inference Context Memory Storage, which the company says is needed to store and share the data generated by trillion-parameter models and multi-step reasoning AI systems.

Nvidia says all of this makes the Rubin platform more efficient than its previous-generation Grace Blackwell offering.

The company says Rubin can train an equivalent MoE model with one-quarter as many GPUs as Blackwell systems.

Needing fewer GPUs lets companies redeploy the freed-up units to other workloads, improving overall efficiency. Nvidia also claims Rubin delivers a 10x reduction in the cost of inference tokens.
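To make the claimed ratios concrete, here is a back-of-the-envelope sketch. The baseline figures (1,000 GPUs and $2.00 per million tokens) are purely hypothetical, not Nvidia data, and the function simply divides by the claimed factors; real-world savings would depend on the workload, pricing and utilisation.

```python
# Nvidia's claimed improvements of Rubin over Blackwell, as reported above.
GPU_REDUCTION = 4.0          # 4x fewer GPUs to train an equivalent MoE model
TOKEN_COST_REDUCTION = 10.0  # 10x lower inference cost per token

def rubin_estimate(blackwell_gpus, blackwell_cost_per_m_tokens):
    """Scale a hypothetical Blackwell baseline by the claimed ratios.

    Returns (gpus_needed, gpus_freed, cost_per_million_tokens).
    """
    gpus_needed = blackwell_gpus / GPU_REDUCTION
    gpus_freed = blackwell_gpus - gpus_needed
    cost = blackwell_cost_per_m_tokens / TOKEN_COST_REDUCTION
    return gpus_needed, gpus_freed, cost

# Hypothetical baseline: 1,000 GPUs and $2.00 per million tokens.
needed, freed, cost = rubin_estimate(1000, 2.00)
print(f"GPUs needed: {needed:.0f}, freed: {freed:.0f}, cost per 1M tokens: ${cost:.2f}")
# prints "GPUs needed: 250, freed: 750, cost per 1M tokens: $0.20"
```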

Tokens are the basic units of data that AI models process. Models break inputs such as words, parts of sentences, images and video into these smaller, easier-to-process pieces, a step known as tokenisation.
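Production models use learned schemes such as byte-pair encoding; the sketch below instead uses a simpler greedy longest-match approach over a tiny, made-up vocabulary, just to show how a sentence becomes subword pieces and then integer IDs. The vocabulary `VOCAB` and the functions `tokenize` and `encode` are hypothetical.

```python
# Hypothetical toy vocabulary mapping subword strings to integer IDs.
VOCAB = {"<unk>": 0, "token": 1, "s": 2, "are": 3, "cheap": 4, "er": 5, "now": 6}

def tokenize(text, vocab):
    """Greedy longest-match subword tokenisation.

    Splits on whitespace, then repeatedly takes the longest vocabulary entry
    that prefixes the remaining characters; characters with no match fall
    back to single-character tokens.
    """
    tokens = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            end = len(word)
            # Shrink the candidate until it is in the vocab or one character long.
            while end > start + 1 and word[start:end] not in vocab:
                end -= 1
            tokens.append(word[start:end])
            start = end
    return tokens

def encode(tokens, vocab):
    """Map each token to its ID; unknown tokens get the <unk> ID (0)."""
    return [vocab.get(t, vocab["<unk>"]) for t in tokens]

pieces = tokenize("Tokens are cheaper now", VOCAB)
print(pieces)                 # ['token', 's', 'are', 'cheap', 'er', 'now']
print(encode(pieces, VOCAB))  # [1, 2, 3, 4, 5, 6]
```

Every one of these IDs is a unit the model must process, which is why the cost per token matters so much at scale.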

Processing tokens is, however, resource-intensive and therefore energy-hungry, especially for very large AI models. Lowering the cost per token would give Rubin a better total cost of ownership than its predecessors.

Nvidia says it has been sampling the Rubin platform with partners and that the platform is now in volume production.

Its chips have made Nvidia the world's most valuable company, with a market capitalisation of approximately $4.6 trillion. Its market cap topped $5 trillion in October, but concerns about AI spending and persistent fears of an AI bubble have since pulled its value down to current levels.

Nvidia also faces growing competition, both from rivals such as AMD, which is introducing its own Helios rack-scale systems to compete with the NVL72, and from its own customers.