How Reinforcement Learning Is Shaping the Future of Autonomous Systems

In 2025, reinforcement learning (RL) is pushing the boundaries of what machines can do, especially in autonomous systems. It is widely regarded as one of the most fascinating subfields of AI.

Imagine a car that teaches itself to drive better every day, not because it has been programmed to follow rules, but because it learns from its mistakes and improves each time it acts. That is the promise of reinforcement learning for autonomous systems.

A McKinsey report estimates that autonomous driving could generate $300 billion to $400 billion in revenue by 2035. That projection reflects how heavily businesses are investing in systems that can make decisions on their own.

Drones, self-driving cars, and delivery robots all need to make smart decisions in real-world environments. Rather than relying on hand-coded rules, these systems can improve continuously through a structured learning process.

With that said, let’s look at what RL is, what benefits it brings, and how it is applied in autonomous systems.

What is Reinforcement Learning?

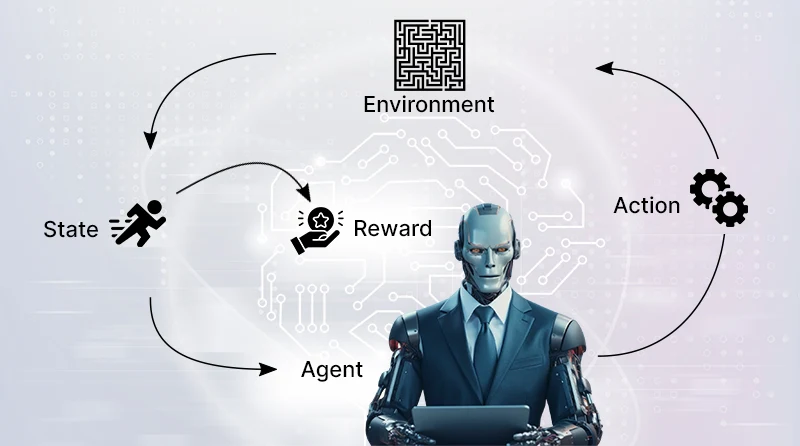

Reinforcement learning is a branch of machine learning in which an agent learns to make better decisions by interacting with its environment. Unlike supervised and unsupervised learning, it does not depend on a fixed dataset.

Instead, the agent learns by trial and error in a continuous loop: it takes actions, receives feedback, and updates its policy so that, over time, it earns greater rewards.

Each action the agent performs produces a reward or a penalty. These signals steer the agent toward a higher cumulative reward and, eventually, toward an optimal policy: a rule for choosing actions that yields the best long-term results in each situation.

The mathematical framework behind RL is the Markov Decision Process (MDP), a model that defines the possible states, actions, and rewards, along with how actions move the agent from one state to the next, so the agent can anticipate future states and choose appropriate actions.
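To make the MDP idea concrete, here is a minimal sketch of a made-up three-state MDP ("far", "near", "goal") stored as plain dictionaries, solved with value iteration, a classic dynamic-programming method for MDPs. The states, probabilities, rewards, discount factor, and function names are all illustrative assumptions, not part of any specific system.

```python
from typing import Dict, List, Tuple

# Each outcome is (probability, next_state, reward); all numbers here are made up.
Outcome = Tuple[float, str, float]
MDP = Dict[str, Dict[str, List[Outcome]]]

toy_mdp: MDP = {
    "far": {
        "wait": [(1.0, "far", 0.0)],
        "approach": [(0.8, "near", 0.0), (0.2, "far", 0.0)],  # approaching can fail
    },
    "near": {
        "wait": [(1.0, "near", 0.0)],
        "approach": [(1.0, "goal", 1.0)],  # reaching the goal pays +1
    },
    "goal": {"wait": [(1.0, "goal", 0.0)]},  # absorbing terminal state
}


def q_value(mdp: MDP, V: Dict[str, float], s: str, a: str, gamma: float) -> float:
    """Expected immediate reward plus the discounted value of the next state."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in mdp[s][a])


def value_iteration(mdp: MDP, gamma: float = 0.9, tol: float = 1e-10) -> Dict[str, float]:
    """Apply the Bellman optimality backup until the values stop changing."""
    V = {s: 0.0 for s in mdp}
    while True:
        delta = 0.0
        for s in mdp:
            best = max(q_value(mdp, V, s, a, gamma) for a in mdp[s])
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            return V


def greedy_policy(mdp: MDP, V: Dict[str, float], gamma: float = 0.9) -> Dict[str, str]:
    """In each state, pick the action with the highest one-step lookahead value."""
    return {s: max(mdp[s], key=lambda a: q_value(mdp, V, s, a, gamma)) for s in mdp}


V = value_iteration(toy_mdp)
policy = greedy_policy(toy_mdp, V)
print(V)       # "far" is about 0.878, "near" is 1.0, "goal" is 0.0
print(policy)  # "approach" in "far" and "near", "wait" in "goal"
```

Value iteration needs the full transition model, which real autonomous systems rarely have; that gap is exactly what the model-free methods discussed next try to close.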

You will also hear about value functions, policy gradient methods, and temporal difference learning, all of which shape how agents make progress and refine their decision-making.
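Temporal difference learning is the easiest of these three to show in a few lines. The sketch below applies the TD(0) update V(s) ← V(s) + α[r + γV(s') - V(s)] to a hypothetical five-state random walk, a classic textbook toy problem. The function names, step size, and episode count are illustrative assumptions.

```python
import random


def td0_update(V, s, r, s_next, alpha, gamma, terminal):
    """One TD(0) step: move V[s] toward the bootstrapped target r + gamma * V[s_next]."""
    target = r if terminal else r + gamma * V[s_next]
    td_error = target - V[s]
    V[s] += alpha * td_error
    return td_error


def random_walk_td0(episodes=10_000, alpha=0.01, gamma=1.0, seed=0):
    """Estimate state values for a random walk over states 1..5 (exits at 0 and 6).

    Each step moves left or right with equal probability. Exiting on the right
    (reaching 6) pays +1; every other transition pays 0.
    """
    rng = random.Random(seed)
    V = {s: 0.5 for s in range(1, 6)}  # initial guesses
    for _ in range(episodes):
        s = 3  # every episode starts in the middle
        while True:
            s_next = s + rng.choice((-1, 1))
            terminal = s_next in (0, 6)
            r = 1.0 if s_next == 6 else 0.0
            td0_update(V, s, r, s_next, alpha, gamma, terminal)
            if terminal:
                break
            s = s_next
    return V


print(random_walk_td0())  # values approach 1/6, 2/6, ..., 5/6 for states 1..5
```

The agent never sees the "true" values; it only bootstraps from its own next-state estimates, which is what distinguishes TD learning from waiting for full episode returns.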

An RL agent can be viewed as a curious explorer: it experiments, fails, learns, and gets better. As it gathers experience through its reward function and feedback loop, it becomes more successful at adapting to complex environments.

This process typically involves:

- State (s): the current situation.

- Action (a): the decision the agent makes.

- Reward (r): feedback from the environment based on the action.

- Policy (π): the strategy the agent follows to choose actions.

- Value function (V): the expected cumulative reward from a state.

- Q function (Q): the expected cumulative reward from a state-action pair.
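In the standard formulation, the "expected reward" behind V and Q is the expected discounted sum of future rewards. Written out, with a discount factor γ between 0 and 1 (a parameter not named in the list) that makes near-term rewards count more than distant ones:

$$
V^{\pi}(s) = \mathbb{E}_{\pi}\!\left[\sum_{t=0}^{\infty} \gamma^{t} r_{t+1} \,\middle|\, s_0 = s\right],
\qquad
Q^{\pi}(s, a) = \mathbb{E}_{\pi}\!\left[\sum_{t=0}^{\infty} \gamma^{t} r_{t+1} \,\middle|\, s_0 = s,\ a_0 = a\right]
$$

$$
V^{\pi}(s) = \sum_{a} \pi(a \mid s)\, Q^{\pi}(s, a)
$$

The second identity says that a state's value is the policy-weighted average of the values of the actions available in it.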

These systems do not simply obey instructions; over time, they learn the best way to behave. With the basics in place, let’s explore how reinforcement learning is revolutionizing autonomous systems.

How Reinforcement Learning Is Revolutionizing Autonomous Systems

By now, you have seen that RL is a powerful method that helps AI systems perform well even in environments they have not encountered before.

Put another way, reinforcement learning is turning simply programmed machines into sophisticated problem-solvers.

Conventional, rule-based approaches often struggle in dynamic environments. So how exactly is reinforcement learning changing autonomous systems?

Consider a self-driving car that encounters a fallen tree branch, a situation its original programming never anticipated. RL lets the car develop new strategies and adapt to complex environments through trial and error and feedback loops, without engineers hand-coding a rule for every case.

RL algorithms let autonomous systems keep improving the agent’s performance, even when the environment responds unexpectedly.

Here is where RL is making the biggest changes in autonomous systems:

Smarter decision-making:

Reinforcement learning (RL) enables self-driving cars, warehouse robots, and drones to choose their next course of action. Rather than following predetermined code, they learn through trial and error to make smart decisions in real time.

How it works:

- The RL agent interacts with the environment.

- The agent observes the current state, takes an action, and receives a reward.

- Over time, it learns how to maximize its cumulative reward, improving its performance.
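The observe-act-reward loop above can be sketched in a few lines. The `CorridorEnv` class, its reward values, and the `reset`/`step` method names are hypothetical; they loosely follow the reset/step pattern common in RL toolkits but are not any library's actual API.

```python
import random
from dataclasses import dataclass


@dataclass
class CorridorEnv:
    """Hypothetical toy environment: start at position 0 and try to reach `goal`.

    Each step costs -0.1; reaching the goal pays +1 and ends the episode.
    Episodes are also cut off after `max_steps` steps.
    """
    goal: int = 4
    max_steps: int = 50

    def reset(self) -> int:
        self.pos, self.t = 0, 0
        return self.pos

    def step(self, action: int):
        # action is -1 (move left) or +1 (move right); position cannot go below 0
        self.pos = max(0, self.pos + action)
        self.t += 1
        reached = self.pos == self.goal
        done = reached or self.t >= self.max_steps
        reward = 1.0 if reached else -0.1
        return self.pos, reward, done


def run_episode(env, policy) -> float:
    """Observe the state, act, collect the reward, and repeat until the episode ends."""
    state, total, done = env.reset(), 0.0, False
    while not done:
        action = policy(state)
        state, reward, done = env.step(action)
        total += reward
    return total


rng = random.Random(0)
print(run_episode(CorridorEnv(), lambda s: 1))                     # always move right: about 0.7
print(run_episode(CorridorEnv(), lambda s: rng.choice((-1, 1))))   # random policy: usually lower
```

A learning agent would replace the fixed `policy` function with one that updates itself from the rewards it collects.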

Adapting to changing situations:

Unlike supervised and unsupervised learning, RL can thrive in unpredictable, real-world conditions. It lets autonomous systems adapt to varying conditions by interacting with the environment continuously rather than relying on predetermined input data.

Deep reinforcement learning in action:

Deep RL combines reinforcement learning with deep neural networks. This helps robots process raw sensory inputs, interpret the state space, and execute fine motions.

It enables robot learning in complex environments, such as a drone flying around obstacles or a delivery robot weaving through a crowded street.

Striking the right balance between exploration and exploitation:

Every RL system has to manage the exploration-exploitation trade-off: deciding when to explore new strategies and when to exploit ones that have already proven effective.
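A common, simple way to manage this trade-off is epsilon-greedy action selection. The sketch below applies it to a hypothetical multi-armed bandit (a set of actions with unknown average rewards); the reward means, noise level, and epsilon value are illustrative assumptions.

```python
import random


def epsilon_greedy_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Learn action values on a bandit with Gaussian rewards using epsilon-greedy selection."""
    rng = random.Random(seed)
    n = len(true_means)
    Q = [0.0] * n  # estimated value of each action
    N = [0] * n    # how many times each action has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(n)                   # explore: try a random action
        else:
            a = max(range(n), key=lambda i: Q[i])  # exploit: best estimate so far
        reward = rng.gauss(true_means[a], 1.0)     # noisy feedback from the environment
        N[a] += 1
        Q[a] += (reward - Q[a]) / N[a]             # incremental sample-average update
    return Q, N


Q, N = epsilon_greedy_bandit([0.2, 0.5, 1.0])
print(N)  # most pulls should go to the last (best) action
```

With epsilon = 0 the agent can lock onto whichever action looked good first; a small epsilon keeps testing alternatives at a modest, bounded cost.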

Advanced learning methods:

Actor-critic and policy gradient methods help the agent find better policies that keep improving over time.

These methods can also help autonomous agents make more human-like decisions and reduce unintended behaviors.
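The core idea of policy gradient methods can be sketched on a hypothetical two-action bandit: a softmax policy over learnable preferences is nudged in the direction of the log-probability gradient, scaled by how much better the reward was than a running-average baseline. The baseline plays a role similar to the critic in actor-critic methods; this is a simplified REINFORCE-style illustration under those assumptions, not a full actor-critic algorithm.

```python
import math
import random


def softmax(prefs):
    """Convert action preferences into probabilities (numerically stable)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]


def policy_gradient_bandit(true_means, steps=3000, alpha=0.1, seed=0):
    rng = random.Random(seed)
    prefs = [0.0] * len(true_means)  # policy parameters (the "actor")
    baseline = 0.0                   # running average reward (a simple "critic")
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        a = rng.choices(range(len(prefs)), weights=probs)[0]  # sample from the policy
        reward = rng.gauss(true_means[a], 1.0)
        advantage = reward - baseline
        baseline += (reward - baseline) / t
        # d/d prefs[i] of log pi(a) for a softmax policy is (1[i == a] - probs[i])
        for i in range(len(prefs)):
            indicator = 1.0 if i == a else 0.0
            prefs[i] += alpha * advantage * (indicator - probs[i])
    return softmax(prefs)


print(policy_gradient_bandit([0.0, 1.0]))  # probability mass shifts toward the better action
```

Subtracting the baseline does not change the expected direction of the update, but it reduces variance, which is the same motivation behind the critic in actor-critic methods.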

Continuous learning and pattern recognition:

Through continuous training, RL-driven systems can identify patterns, predict future states, and adjust the agent’s actions as needed. Industries that have adopted reinforcement learning algorithms have made significant progress in:

- Self-driving (speed optimization)

- Precision control (robotic surgery)

- Smart logistics (real-time resource allocation)

Key Benefits of Using Reinforcement Learning in Autonomous Systems

Reinforcement learning (RL) offers several powerful benefits in autonomous systems that conventional programming frameworks cannot match. That is why RL is a popular choice for building smarter, more flexible machines:

1. Adaptability to Dynamic Environments

As we have seen, RL-driven systems perform well when the environment changes or is uncertain.

As a result, RL-based autonomous systems can be more robust and reliable than ones that operate on rigid rules, because they adjust their behavior to new environments through continuous learning from experience and feedback.

2. Continuous Improvement

Unlike fixed-program automation, RL systems are designed to improve over time. This ongoing learning raises the quality of their decisions, which can lead to better performance and safety with each iteration.

3. Less Reliance on Labeled Data

RL agents do not need large volumes of labeled training data; instead, they learn through interaction.

Labeled data is often costly and time-consuming to create, which makes RL practical for real-world domains such as robotics and autonomous driving, where labeled data is hard to obtain.
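Tabular Q-learning shows what learning without labels looks like in practice: no one tells the agent which action is correct, yet it discovers the best route purely from rewards. The corridor task, its reward, and all hyperparameters below are hypothetical choices for a minimal sketch.

```python
import random


def q_learning_corridor(n_states=6, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical corridor.

    States are 0..n_states-1; the agent starts at 0 and gets +1 for reaching the
    rightmost state. Actions: 0 = left, 1 = right. No labeled examples are used.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    Q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon or Q[s][0] == Q[s][1]:
                a = rng.randrange(2)  # explore (also breaks ties randomly)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            # Q-learning bootstraps from the best next action, whatever is taken next
            best_next = 0.0 if s_next == goal else max(Q[s_next])
            Q[s][a] += alpha * (r + gamma * best_next - Q[s][a])
            s = s_next
            if s == goal:
                break
    return Q


Q = q_learning_corridor()
print([("left", "right")[row.index(max(row))] for row in Q[:-1]])  # learned greedy actions
```

After training, "right" should have the higher value in every non-goal state, and Q[0][1] should approach 0.9 ** 4, the discounted value of the four-step path.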

4. Solving High-Dimensional and Complex Problems

RL algorithms are useful for tackling high-dimensional, complex problems because they can explore and evaluate a wide range of strategies.

That is why RL is applied in advanced robotics, games, and autonomous navigation, where many different strategies must be considered.

5. Scalability and Generalization

RL systems can often apply what they have learned to tasks that are similar but different, without being retrained from scratch.

This allows the same reinforcement learning policies to be reused across a wide variety of real-world applications, cutting deployment time and cost.

6. Real-Time Decision Making

Because RL systems keep learning from feedback, autonomous systems can respond quickly to new inputs or disturbances.

This makes them effective in tasks that demand immediate responses, such as driving in traffic or controlling robots.

Challenges of Implementing RL in Autonomous Systems

RL is a powerful method for developing autonomous vehicles, but several challenges emerge when it is applied in practice. Let’s explore the major challenges engineers and researchers face when working with RL models.

Bias in Data

Data bias is one of the major problems for RL in autonomous systems. When an agent is trained on limited or narrow data, such as highway driving only, it can fail to generalize to other conditions, such as congested cities or bad weather. This gap often results in unintended behavior once the model faces unseen situations.

Solution: Train on diverse, real-world data, or use more advanced simulation models that recreate complex situations such as night driving, fog, or unexpected obstacles.

Safety and Risk Management

Conventional RL algorithms learn by trial and error, which becomes dangerous where safety is concerned. A slight mistake in real-world traffic can have disastrous results.

Researchers are proposing safe reinforcement learning (SRL) methods and reward functions with explicit safety constraints.

Solution: For example, Wayve’s autonomous prototypes employ risk-aware policies that keep vehicles at a safe distance even during learning. Even so, guaranteeing safety in every possible situation remains an enormous challenge.
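One simple way to build an explicit safety constraint into the reward is to subtract a penalty whenever a safety margin is violated. The sketch below is a hypothetical car-following reward; the minimum gap, target speed, penalty weight, and collision penalty are made-up assumptions, not values from Wayve or any other company.

```python
def safe_following_reward(speed_mps: float, gap_m: float,
                          min_gap_m: float = 10.0,
                          target_speed_mps: float = 25.0,
                          penalty_weight: float = 5.0) -> float:
    """Hypothetical shaped reward: a progress term minus an explicit safety penalty."""
    if gap_m <= 0.0:
        return -100.0  # collision: large penalty that dominates everything else
    # Progress: 1.0 at the target speed, lower the further the car is from it
    progress = 1.0 - abs(speed_mps - target_speed_mps) / target_speed_mps
    # Safety violation: 0 when the gap is at least min_gap_m, growing as the gap shrinks
    violation = max(0.0, min_gap_m - gap_m) / min_gap_m
    return progress - penalty_weight * violation


print(safe_following_reward(25.0, 20.0))  # fast and safe: 1.0
print(safe_following_reward(20.0, 20.0))  # slower but safe: 0.8
print(safe_following_reward(25.0, 5.0))   # fast but tailgating: -1.5
```

Because tailgating at the target speed scores lower than driving slightly slower with a safe gap, an agent maximizing this reward is pushed toward keeping its distance. Penalties like this shape behavior but do not guarantee it, which is why SRL research also looks at hard constraints.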

Computational Complexity

Deep reinforcement learning models require enormous computing power and time. A single training run can involve millions of interactions between the RL agent and a simulated environment, which makes experimentation slow and resource-intensive.

Techniques such as transfer learning and dynamic programming can reduce training time, but balancing computational efficiency against model accuracy remains challenging.

Solution: Researchers are exploring smaller, power-efficient model designs for real-world scenarios where onboard hardware is constrained.

Integration with Existing Vehicle Systems

RL-based control systems have to coexist with conventional systems already installed in cars, such as traction control or brake assist. In some cases, an RL policy may propose an action that conflicts with these pre-programmed systems.

Solution: To manage this, engineers are building hybrid systems that combine the flexibility of RL with the consistency of rule-based frameworks. One example is Tesla’s layered learning models, in which reinforcement learning policies do not compete with core safety functions.
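A hybrid arrangement can be as simple as letting a rule-based layer review every action the learned policy proposes. In the minimal sketch below, the `Action` type, the time-to-collision rule, and the 2-second threshold are all hypothetical assumptions for illustration; this is not Tesla’s or any manufacturer’s design.

```python
from dataclasses import dataclass


@dataclass
class Action:
    throttle: float  # 0.0 to 1.0
    brake: float     # 0.0 to 1.0


def rule_based_override(proposed: Action, gap_m: float, closing_speed_mps: float,
                        min_ttc_s: float = 2.0) -> Action:
    """Hypothetical safety layer on top of an RL policy.

    If the time to collision (gap / closing speed) drops below min_ttc_s,
    replace the learned action with full braking; otherwise pass it through.
    """
    if closing_speed_mps > 0 and gap_m / closing_speed_mps < min_ttc_s:
        return Action(throttle=0.0, brake=1.0)
    return proposed


rl_action = Action(throttle=0.6, brake=0.0)               # what the RL policy wants
print(rule_based_override(rl_action, gap_m=50.0, closing_speed_mps=5.0))  # kept (TTC 10 s)
print(rule_based_override(rl_action, gap_m=8.0, closing_speed_mps=5.0))   # overridden (TTC 1.6 s)
```

Keeping the rule outside the learned policy means it stays predictable and testable on its own, while the RL policy remains free to optimize everything the rule does not forbid.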

Real-World Unpredictability

Unlike controlled simulations, real-world environments are unpredictable. Human drivers, sensor noise, and road construction introduce noise that RL algorithms find hard to interpret. This unpredictability affects the agent’s performance and makes it harder to act optimally.

Solution: Researchers are developing adaptive learning models that update continuously based on live feedback, helping autonomous agents handle sudden changes without losing accuracy or stability.

Ethical and Regulatory Barriers

Finally, the use of RL in autonomous systems raises ethical and compliance issues. Who is accountable when an RL agent makes a wrong decision? How can you make sure that reward signals encourage safe, fair behavior rather than shortcuts?

Solution: Governments and developers are working toward unified frameworks for ethical AI that keep decision-making transparent and help maintain public trust.

Real-World Applications of RL in Autonomous Systems

Now let’s explore how RL-driven autonomy is changing operations in real-world applications.

Autonomous Vehicles (AVs)

Reinforcement learning allows autonomous vehicles to make immediate driving decisions based on both simulation and real-world experience.

It assists with lane changes, obstacle avoidance, and traffic prediction. Waymo trains its self-driving cars to navigate complex traffic situations with the help of RL.

Drones and UAVs

With RL, drones can determine the best flight routes, adapt to wind dynamics, and save energy during missions. This makes them capable of handling deliveries, inspections, search-and-rescue missions, and more.

Amazon Prime Air applies RL-inspired algorithms for route optimization and safe package delivery.

Robotics

In robotics, RL is used to train complex robots, such as pickers, assemblers, and surgical robots. Through trial and error, these robots keep improving their accuracy and flexibility.

Boston Dynamics’ Spot robot uses RL-based control to execute dynamic movements, such as opening doors or climbing stairs.

Autonomous Maritime Systems

In the maritime industry, ships and submarines use RL to plan efficient routes and avoid collisions in unpredictable marine environments. This can improve fuel efficiency and help prevent accidents during deep-sea or port operations.

Rolls-Royce’s autonomous ship project uses RL to optimize sea routes and reduce operational risk.

Smart Grids & Energy Systems

RL helps balance electricity loads, minimize power waste, and manage renewable energy storage. These systems respond to changing energy demand in real time.

DeepMind (Google) applied AI to the cooling systems of Google’s data centers, reducing the energy used for cooling by up to 40%.

Aerospace & Space Exploration

In aerospace, RL is used for autonomous spacecraft and satellite navigation and for landing decisions on unknown terrain. It helps spacecraft adapt to dynamic conditions without human intervention.

NASA’s Mars rovers must avoid obstacles and plan paths across rough Martian terrain on their own, and RL is being researched for exactly this kind of planetary navigation.

Healthcare Automation

RL supports robotic surgery, treatment optimization, and real-time patient monitoring. Systems are trained to adjust procedures, such as medication dosing, according to patient responses. IBM Watson Health tested RL-driven decision systems for recommending cancer treatments.

Industrial Process Control

RL enables factories to automate their processes, plan maintenance, and avoid expensive downtime. It also helps machines learn from operating data and predict workflow needs.

Siemens uses RL in its manufacturing facilities for real-time predictive maintenance and process optimization.

The Future of Reinforcement Learning in Autonomous Systems

Reinforcement learning (RL) has a promising, transformative future in autonomous systems. As RL algorithms become more efficient and sophisticated, expect autonomous systems to become smarter, safer, and more versatile.

“The integration of reinforcement learning in autonomous vehicles represents a significant leap forward, but it’s clear that we’re still in the early stages of this technology’s potential.”

Dr. Huei Peng, Director of Mcity at the University of Michigan

We are moving toward a world where RL-controlled agents operate smoothly in increasingly complex environments, adapting to new challenges with only minimal human supervision.

Moreover, a newer development is the integration of deep reinforcement learning with transfer learning, which can accelerate learning across different environments.

This means autonomous systems could reuse knowledge gained in one situation in another, shortening time to deployment and improving performance.

What we can expect:

More Intelligent and Adaptive Autonomous Agents

Advances in reinforcement learning will let autonomous agents adapt to complex, changing environments faster than before.

Agents will not only learn from direct feedback but also predict future states and their interactions with other agents. For example, a future self-driving fleet could avoid traffic jams or accidents by anticipating when they are likely to occur.

Deep Neural Network Integration

Integrating RL with deep neural networks yields deep reinforcement learning systems that can process high-dimensional input data such as images, audio, and sensor readings. This opens up opportunities for robots to learn in unpredictable environments.

By combining RL and deep neural networks, drones could navigate storms or dense forests on their own.

Learning in Dynamically Changing Environments

The next generation of RL systems will work in the real world and adapt to changes in real time, making immediate decisions even when new variables appear.

For example, warehouse robots could reroute deliveries in real time when aisles are blocked or inventory changes.

Multi-Agent Collaboration

RL will increasingly support multiple independent agents that learn from one another’s experiences, improving efficiency and coordination in complex environments.

For example, swarm robots in agriculture could coordinate planting, monitoring, and harvesting crops without human involvement.
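One common building block for letting agents learn from each other’s experience is a shared replay buffer: every agent writes its transitions to a common pool, and any agent can train on minibatches drawn from it. The class name, capacity, and the farming-flavored example data below are hypothetical, shown only as a minimal sketch of the idea.

```python
import random
from collections import deque
from typing import Any, List, Tuple

Transition = Tuple[Any, Any, float, Any]  # (state, action, reward, next_state)


class SharedReplayBuffer:
    """Hypothetical pool of transitions that several agents write to and learn from."""

    def __init__(self, capacity: int = 10_000, seed: int = 0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def add(self, agent_id: str, transition: Transition) -> None:
        self.buffer.append((agent_id, transition))

    def sample(self, batch_size: int) -> List[Tuple[str, Transition]]:
        # Uniform random minibatch drawn from every agent's experience
        return self.rng.sample(list(self.buffer), min(batch_size, len(self.buffer)))

    def __len__(self) -> int:
        return len(self.buffer)


shared = SharedReplayBuffer(capacity=1000)
shared.add("robot_a", ("row_3", "plant", 1.0, "row_4"))
shared.add("robot_b", ("row_7", "harvest", 2.0, "row_8"))
batch = shared.sample(2)  # either robot can now learn from both experiences
print(batch)
```

Pooling experience this way lets a rare event seen by one agent inform the others, though in practice agents with very different roles may need separate or weighted buffers.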

Less Reliance on Simulations

Future RL systems will need less up-front training in simulation. Using more sophisticated policy gradient and actor-critic approaches, agents will learn safely in the real world, narrowing the gap between simulated and real performance.

Delivery drones could learn the most efficient flight paths directly in urban airspace while avoiding crashes.

Ethical and Safety Concerns Around RL in Autonomous Systems

RL in autonomous systems raises ethical and safety concerns, primarily related to bias, safety, and accountability. Because RL systems learn by interacting with their surroundings, the results can be harmful if they are not designed carefully.

Let’s talk about some ethical and safety-related concerns in more depth:

The Absence of Transparency

RL models can behave like black boxes, making their decisions hard to justify or explain. This raises accountability concerns when they are used in critical tasks such as health care or autonomous driving.

Unintended Behaviors

Poorly designed reward functions can let agents exploit loopholes, producing harmful or unethical behavior that maximizes the reward while breaching safety or societal norms.
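A toy example shows how such a loophole arises. In the hypothetical sketch below, an agent moves along a line of positions; the flawed reward pays for every step spent on a waypoint, so shuttling back and forth over one waypoint outscores actually finishing the route. The positions, waypoints, and bonus value are made up for illustration.

```python
def flawed_reward(trajectory, waypoints):
    """Pays +1 every time the agent is on a waypoint, so revisits keep paying."""
    return sum(1.0 for pos in trajectory if pos in waypoints)


def fixed_reward(trajectory, waypoints, goal):
    """Pays +1 only for the first visit to each waypoint, plus a bonus for ending at the goal."""
    visited = set()
    total = 0.0
    for pos in trajectory:
        if pos in waypoints and pos not in visited:
            visited.add(pos)
            total += 1.0
    if trajectory and trajectory[-1] == goal:
        total += 10.0
    return total


waypoints, goal = {2, 4}, 6
intended = [0, 1, 2, 3, 4, 5, 6]           # drive the route to the goal
exploit = [0, 1, 2, 1, 2, 1, 2, 1, 2, 1, 2]  # loop around the first waypoint

print(flawed_reward(intended, waypoints), flawed_reward(exploit, waypoints))  # 2.0 5.0
print(fixed_reward(intended, waypoints, goal), fixed_reward(exploit, waypoints, goal))  # 12.0 1.0
```

Under the flawed reward, a reward-maximizing agent would learn to loop; under the fixed one, completing the route is the better strategy.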

Exploration Risks

While balancing exploration of new actions against exploitation of known strategies, RL agents may behave riskily or erratically during training or deployment.

Bias in Decision-Making

RL systems trained on historical or biased data can replicate that bias in their results, leading to discrimination in areas such as hiring, finance, or law enforcement.

Safety and Reliability

It is also important to guarantee that RL agents remain safe in uncommon or untested situations, particularly where human lives or critical infrastructure are involved.

Legal and Ethical Accountability

Legal frameworks lag behind technological progress on the question of who is liable when an autonomous system fails, and they are still evolving.

Manipulation Risks

RL systems in social or commercial settings can exploit human psychology, for example by rewarding addictive engagement patterns in social media algorithms.

Ethical Development Practices

Formal verification, ethical guidelines, and continuous auditing can help reduce risks and keep RL systems aligned with societal values.

Conclusion: The Road Ahead for Reinforcement Learning and Autonomous Systems

Reinforcement learning (RL) is leading the revolution in autonomous systems.

As more advanced RL algorithms are developed in 2025, learning will become faster and generalization will improve. That will allow RL to be applied more safely in critical areas such as transportation, robotics, and medical care.

Integrating deep reinforcement learning with other AI techniques, such as transfer learning and multi-agent systems, will broaden RL’s applicability.

The true game changer will be collaboration: researchers expect that autonomous vehicles will soon be able to share their learned experiences, leading to safer and more efficient fleets.

In addition, autonomous agents will be able to make split-second decisions, learn from less data, and adapt more effectively to new conditions.

Finally, safety and ethics will shape the future of RL, and advances in uncertainty-aware algorithms and formal verification will help make autonomous systems more responsible and predictable.

FAQs

Can RL-based autonomous systems be safe?

Complete safety has not yet been achieved. Researchers are developing safe reinforcement learning (SRL) methods that build constraints or safety shields into the training process so that the agent avoids risky actions.

What are the major challenges of implementing RL?

Applying RL to autonomous systems raises several issues:

- How to design a correct reward function

- How to prevent unwanted behaviors

- How to manage resource-intensive computation

- How to stay safe in a dynamic environment

Moving from simulation to real-world deployment and managing ethical issues are also significant challenges.

Can RL improve efficiency in real life?

Yes. RL has been used to optimize decisions, improve robot learning, and increase efficiency in autonomous systems. Warehouse logistics, energy management, and autonomous driving are examples where RL agents can adjust to new circumstances, find new patterns, and make good long-term solutions feasible.

How will RL be used in the future in autonomous systems?

The future points toward smarter RL agents, deep reinforcement learning, real-time adaptation to complex environments, and multi-agent collaboration. These systems will need less simulated training, learn to operate safely in the real world, and keep improving agent performance while addressing ethical and safety issues.

What is the difference between RL and supervised learning?

Supervised learning trains a model on labeled datasets, whereas RL agents learn by interacting with the environment through trial and error. They improve their behavior based on reward signals rather than labels, which makes RL well suited to uncertain, dynamic conditions where conventional approaches are likely to fail.

Which types of RL algorithms apply in autonomous systems?

Deep reinforcement learning systems, which combine deep neural networks with classic RL techniques, are common in autonomous systems.

Popular algorithms include Q-learning, policy gradient algorithms (such as PPO), actor-critic algorithms, and model-based RL.
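For a flavor of what distinguishes PPO, here is a minimal sketch of its clipped surrogate objective, the quantity PPO maximizes for the policy. It shows only this one term: full PPO implementations also train a value function, usually add an entropy bonus, and compute the ratios and advantages from collected rollouts, all of which are assumed to be given here. The function name and the 0.2 clip value are illustrative choices.

```python
def ppo_clip_objective(ratios, advantages, clip_eps=0.2):
    """Average PPO clipped surrogate: mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    ratios[i] = pi_new(a_i | s_i) / pi_old(a_i | s_i) for a sampled action;
    advantages[i] = how much better that action was than expected.
    """
    total = 0.0
    for r, adv in zip(ratios, advantages):
        clipped_r = min(max(r, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum removes any incentive to move the ratio outside the clip range
        total += min(r * adv, clipped_r * adv)
    return total / len(ratios)


print(ppo_clip_objective([1.5], [1.0]))   # gain capped at 1.2 instead of 1.5
print(ppo_clip_objective([0.5], [-1.0]))  # pessimistic value -0.8 instead of -0.5
```

The clipping keeps each policy update close to the previous policy, which is one reason PPO is a popular, relatively stable default in practice.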

What is the biggest challenge that prevents the application of RL to real-life autonomous systems?

Safe reinforcement learning is one of the key challenges. The real world is noisy, unpredictable, and unforgiving of errors. Algorithms are frequently data-intensive, and policies learned in simulation do not easily generalize to real environments.